Artificial intelligence (AI) is often described as neutral, objective, and data-driven. In reality, AI systems reflect the values, assumptions, and power structures embedded in the data they are trained on and in the goals they are designed to optimize. As AI becomes deeply integrated into hiring, lending, healthcare, education, policing, advertising, and pricing, it has begun to discriminate at scale, often invisibly and with far-reaching consequences.

AI-driven discrimination does not usually arise from malicious intent. Instead, it emerges from biased data, flawed objectives, and feedback loops that compound existing inequality. When historical data reflects social inequality (differences in income, race, geography, gender, or access), AI systems trained on that data learn to reproduce those disparities and can even amplify them. This is especially dangerous because algorithmic decisions often appear authoritative and beyond question.

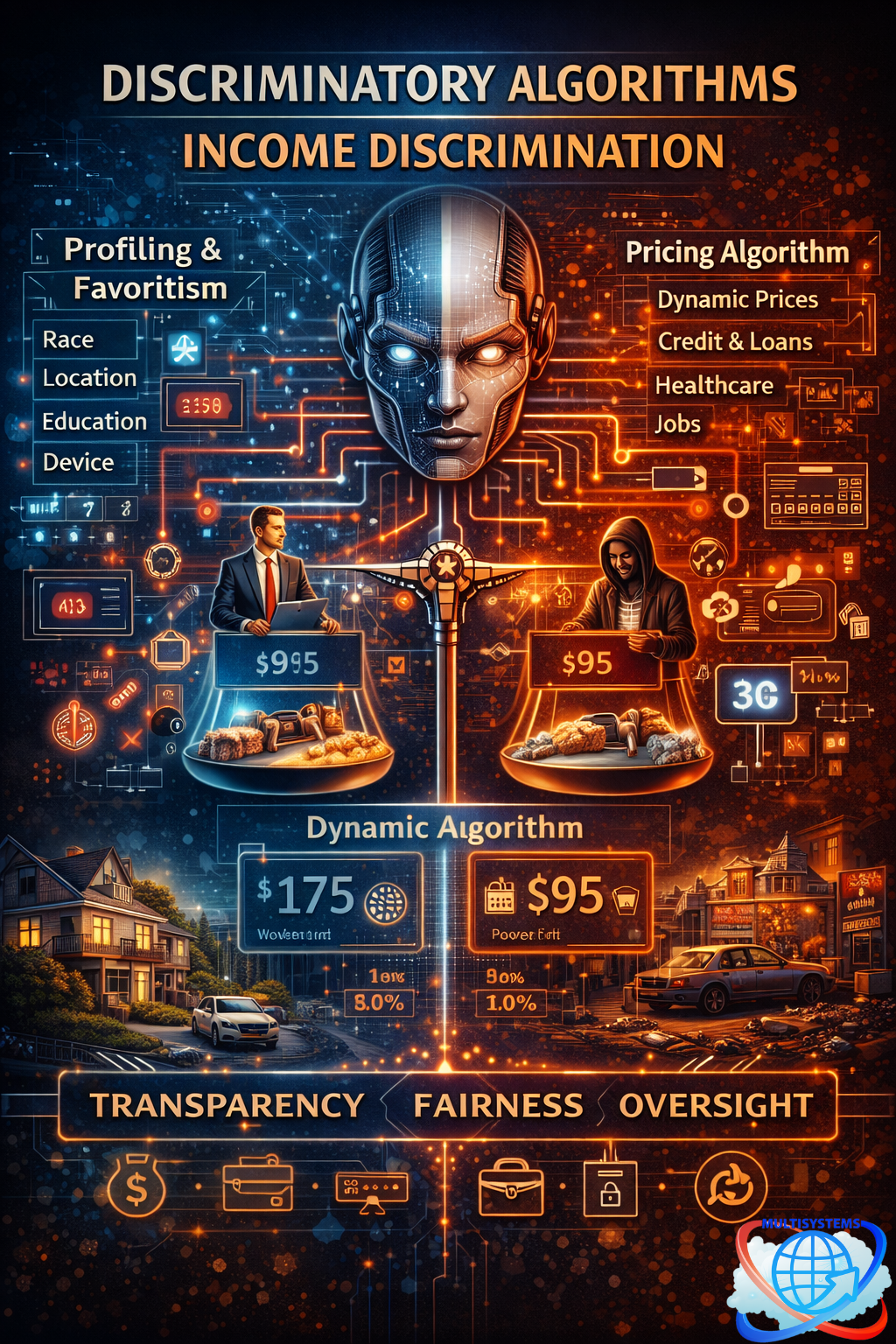

One of the most powerful and least visible forms of AI-driven discrimination occurs through profiling and favoritism. Algorithms categorize individuals based on behavioral data, purchasing patterns, location, device type, educational signals, and inferred socioeconomic status. These profiles determine who sees certain job ads, who qualifies for loans, who gets premium services, who is surveilled more heavily, and who pays more for the same goods. Favoritism is no longer only personal; it is computational.

This problem becomes even more severe when discrimination is embedded in pricing algorithms, which dynamically adjust prices based on a customer's perceived willingness or ability to pay. Although often justified as “market efficiency,” dynamic pricing can quietly become income discrimination by design. If an algorithm learns that wealthier users tolerate higher prices, it will systematically charge them more. Conversely, if it learns that lower-income users are “less profitable,” it may restrict their access, raise their interest rates, or steer them toward inferior options. Over time, this can produce what might be called an income algorithm: a system that sorts, prices, and limits opportunity based on economic profile rather than fairness or need.
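To make the mechanism concrete, here is a minimal Python sketch of a revenue-maximizing pricer. Everything in it is an assumption for illustration: the segment labels, the willingness-to-pay samples, the candidate prices, and the `MIN_REVENUE_PER_VISITOR` cutoff are invented, and `revenue_per_visitor` and `learn_policy` are hypothetical helpers, not any real product's API. The objective contains nothing but revenue, yet the learned policy charges one segment more and flags the other as not worth serving.

```python
from dataclasses import dataclass

# Hypothetical willingness-to-pay (WTP) samples per inferred segment.
# A real system might estimate these from behavioral proxies (location,
# device type, purchase history); these numbers are invented.
WTP_BY_SEGMENT = {
    "high_income": list(range(14, 26)),  # WTP between 14 and 25
    "low_income": list(range(5, 13)),    # WTP between 5 and 12
}
CANDIDATE_PRICES = [6, 8, 10, 12, 15, 18, 20]
MIN_REVENUE_PER_VISITOR = 6.0  # hypothetical profitability cutoff


@dataclass
class SegmentPolicy:
    price: int
    revenue: float       # expected revenue per visitor at that price
    deprioritized: bool  # True if the segment falls below the cutoff


def revenue_per_visitor(price: int, wtp: list[int]) -> float:
    """Expected revenue per visitor: price times the share who would buy."""
    buyers = sum(1 for w in wtp if w >= price)
    return price * buyers / len(wtp)


def learn_policy(wtp_by_segment, prices, min_revenue):
    """Pick the revenue-maximizing price for each segment and flag
    segments whose best achievable revenue falls below the cutoff."""
    policy = {}
    for segment, wtp in wtp_by_segment.items():
        best = max(prices, key=lambda p: revenue_per_visitor(p, wtp))
        rev = revenue_per_visitor(best, wtp)
        policy[segment] = SegmentPolicy(best, rev, rev < min_revenue)
    return policy


if __name__ == "__main__":
    learned = learn_policy(WTP_BY_SEGMENT, CANDIDATE_PRICES, MIN_REVENUE_PER_VISITOR)
    for segment, p in learned.items():
        print(segment, p)
```

With these invented numbers, the sketch settles on a price of 15 for the `high_income` segment and 6 for the `low_income` segment, and marks the latter as deprioritized because its best revenue per visitor (5.25) falls below the cutoff. Nothing in the code mentions income directly; if the segments were inferred from proxies such as device type or postcode, the same sorting would follow.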

These income algorithms do not operate in isolation; they feed into one another. Higher prices reduce purchasing power, which affects credit scores, access to education, healthcare outcomes, and employment opportunities. Those outcomes generate new data, which then reinforces the original algorithmic assumptions. This creates a self-reinforcing digital caste system, in which mobility is constrained not by law but by code.
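The loop can be illustrated with a toy simulation. It is a sketch under loudly stated assumptions: the scoring rule, the constants, and the idea that each point of extra interest lowers the next observed score by a fixed amount are all invented for illustration and are not calibrated to any real lender. The helper names `offered_rate`, `next_score`, and `simulate` are hypothetical. Both groups face exactly the same rule; the only difference is where they start.

```python
# Hypothetical constants for a toy feedback loop (not calibrated to real data).
REFERENCE_SCORE = 700.0  # score at which the model offers the base rate
BASE_RATE = 0.05         # base annual interest rate
SENSITIVITY = 0.0002     # extra rate per score point below the reference
IMPACT = 500.0           # score points lost per unit of extra rate (toy value)


def offered_rate(score: float) -> float:
    """The model prices risk: lower scores get higher rates (floored at 0)."""
    return max(0.0, BASE_RATE + SENSITIVITY * (REFERENCE_SCORE - score))


def next_score(score: float) -> float:
    """Assumed effect of the offer: extra interest strains finances and lowers
    the next observed score; a below-base rate raises it."""
    return score - IMPACT * (offered_rate(score) - BASE_RATE)


def simulate(start_scores: dict[str, float], rounds: int) -> list[dict[str, float]]:
    """Feed each round's scores back into the same rule and record history."""
    history = [dict(start_scores)]
    for _ in range(rounds):
        history.append({g: next_score(s) for g, s in history[-1].items()})
    return history


if __name__ == "__main__":
    history = simulate({"group_a": 720.0, "group_b": 680.0}, rounds=10)
    for t, scores in enumerate(history):
        gap = scores["group_a"] - scores["group_b"]
        print(f"round {t:2d}: gap = {gap:6.1f} points")
```

Because each group's distance from the reference score grows by a constant factor (here `IMPACT * SENSITIVITY = 0.1`, that is, 10% per round), the initial 40-point gap grows to roughly 104 points after ten rounds. The rule is identical for everyone; the widening disparity comes entirely from feeding the model's own outputs back into its inputs.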

Left unchecked, this dynamic risks extending into society as a whole, shaping neighborhoods, labor markets, political influence, and generational wealth. When AI systems are optimized solely for profit, efficiency, or risk reduction, human dignity and equity are treated as externalities.

To avert this algorithmic crisis, action is required on multiple levels:

First, algorithmic transparency and auditability must become standard. High-impact AI systems—especially those affecting pricing, credit, employment, healthcare, and public services—should be independently audited for bias, disparate impact, and feedback loops.
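As an illustration of one small part of what such an audit might check, the sketch below computes approval rates per group from a log of decisions and compares the lowest rate with the highest. The 0.8 threshold follows the “four-fifths” rule of thumb from US employment-selection guidance; it is a screening heuristic, not a complete fairness test. The decision log and the function names (`selection_rates`, `disparate_impact_ratio`, `audit`) are hypothetical.

```python
from collections import defaultdict

FOUR_FIFTHS = 0.8  # rule-of-thumb threshold; not a legal test on its own


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved: no relative disparity (toy convention)
    return min(rates.values()) / highest


def audit(decisions, threshold=FOUR_FIFTHS):
    """Report per-group rates, the ratio, and whether it falls below threshold."""
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


if __name__ == "__main__":
    # Invented log: group_a approved 80 of 100, group_b approved 40 of 100.
    log = [("group_a", i < 80) for i in range(100)] + [("group_b", i < 40) for i in range(100)]
    print(audit(log))
```

Here the invented log yields a ratio of 0.5, so the system is flagged. A real audit would also examine error rates, proxies for protected attributes, and how decisions feed back into future training data; this ratio only signals a gap worth investigating.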

Second, fairness constraints must be embedded into model objectives, not added as an afterthought. AI should be optimized not only for profit or accuracy, but also for equity, access, and social impact.
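One common way to embed fairness in the objective is to add a penalty term to the quantity being optimized. The sketch below assumes invented per-applicant profit estimates, approves the top-k applicants in each group, and maximizes profit minus a weight `lam` times the gap in approval rates (a demographic-parity penalty). Demographic parity is only one of several competing fairness criteria, and the data, the weight, and the helper name `best_policy` are hypothetical.

```python
from itertools import accumulate

# Hypothetical expected profit of approving each applicant, already sorted
# from most to least profitable within each group (invented numbers).
PROFITS = {
    "group_a": [5, 4, 3, 1, 1, -1, -2, -3],
    "group_b": [3, 2, -1, -2, -3, -4, -5, -6],
}


def best_policy(profits, lam):
    """Choose how many applicants to approve in each group (top-k by profit)
    to maximize total profit minus lam * |approval-rate gap|."""
    a, b = profits["group_a"], profits["group_b"]
    cum_a = [0, *accumulate(a)]  # cum_a[k] = profit from approving the top k
    cum_b = [0, *accumulate(b)]

    def objective(ks):
        ka, kb = ks
        gap = abs(ka / len(a) - kb / len(b))  # demographic-parity gap
        return cum_a[ka] + cum_b[kb] - lam * gap

    # Exhaustive search over all (k_a, k_b) pairs; fine for toy sizes.
    ka, kb = max(((i, j) for i in range(len(a) + 1) for j in range(len(b) + 1)),
                 key=objective)
    return {"approved": (ka, kb),
            "profit": cum_a[ka] + cum_b[kb],
            "rates": (ka / len(a), kb / len(b))}


if __name__ == "__main__":
    for lam in (0, 80):
        print(lam, best_policy(PROFITS, lam))
```

With `lam=0` the search approves 5 of 8 applicants in `group_a` and 2 of 8 in `group_b` for a profit of 19; with `lam=80` it approves 3 in each group for a profit of 16. The weight makes the trade-off between profit and equity an explicit design decision rather than an afterthought.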

Third, human oversight must remain mandatory. Automated decisions affecting people’s lives should always include meaningful human review and appeal mechanisms.

Fourth, data diversity and correction are essential. Training data should be actively examined, balanced, and corrected to prevent historical injustice from becoming automated destiny.

Finally, public policy and governance must evolve with technology. Governments, regulators, and civil society must treat algorithmic systems as social infrastructure—subject to ethical standards, accountability, and public interest safeguards.

AI has the power to either entrench inequality or dismantle it. The difference lies not in the technology itself, but in the choices society makes about how it is designed, deployed, and governed. If left purely to market forces, algorithms will optimize for profit. If guided by ethical intent and collective responsibility, they can instead become tools for fairness, inclusion, and shared prosperity.

The future is not being written by humans alone—but it is still being chosen by them.